Finding the fastest Python JSON library on all Python versions (8 compared)

Average reading time: 4 minutes

Note: Hey! I just posted my second article titled: "The Guide to Making Your Django SaaS Business Worldwide (for free)". Would really appreciate your thoughts on it!

Handling JSON data is a fundamental task. As JSON has grown in popularity, so has the number of libraries for working with it. But which one is the fastest?

In this article, I will benchmark the following eight Python JSON libraries:

- orjson: ijl/orjson

- pysimdjson: TkTech/pysimdjson

- yapic.json: zozzz/yapic.json

- simplejson: simplejson/simplejson

- ujson: ultrajson/ultrajson

- python-rapidjson: python-rapidjson/python-rapidjson

- cysimdjson: TeskaLabs/cysimdjson

- nujson (deprecated): caiyunapp/ultrajson

Most benchmarks, unlike the one you are reading, include only four JSON libraries: usually the standard library's json, orjson, ujson, and rapidjson. However, thanks to my maximalist nature, I decided to go much further, track down every JSON library that is at least somewhat usable, and test them all. This is how I found some "new players", such as cysimdjson, yapic.json, and pysimdjson.

Additionally, to make my benchmark more complex, I will run every test on each Python version, from the good old 3.8 to the not-yet-released 3.13, to see whether performance differs between them. After all, as developers, we do enjoy adding layers of complexity to everything, don't we? (And surely the Python version makes no difference at all... right?)

It’s time to make your bets.

What's your guess: which JSON library will be the fastest?

Methodology

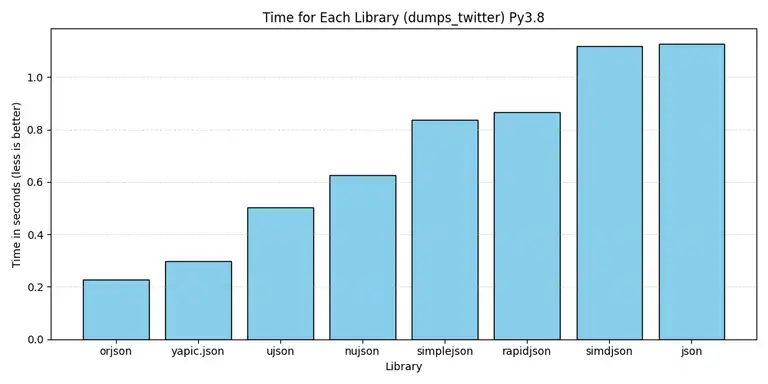

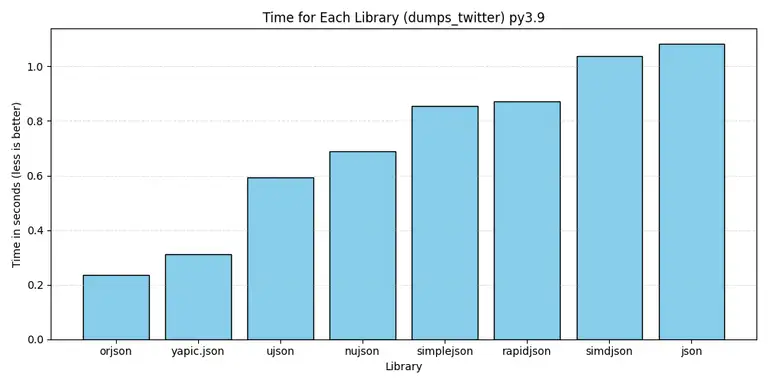

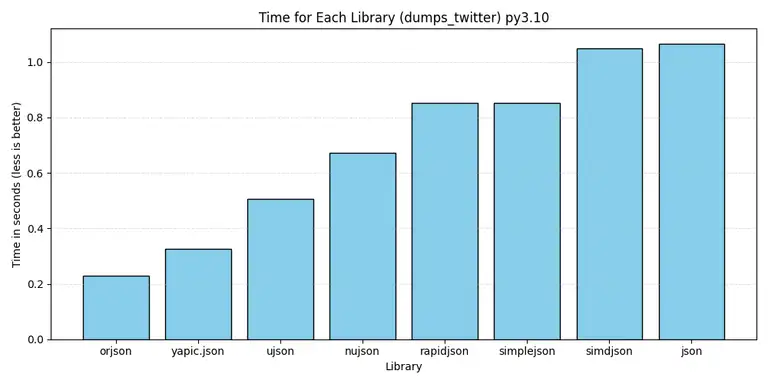

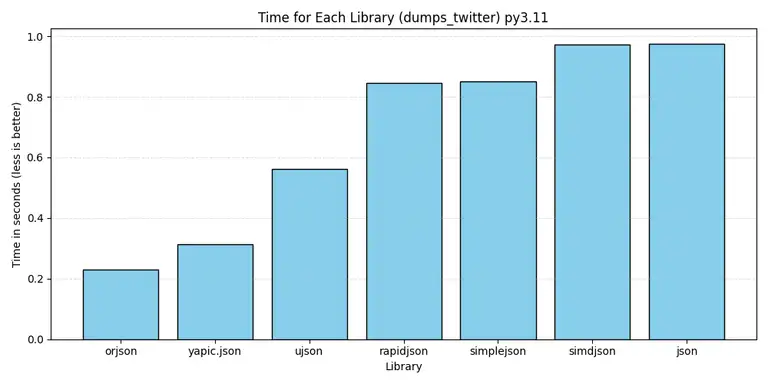

I will perform the benchmarking tests on the following Python versions: 3.8, 3.9, 3.10, 3.11, 3.12, and 3.13, using the latest release available for each of them.

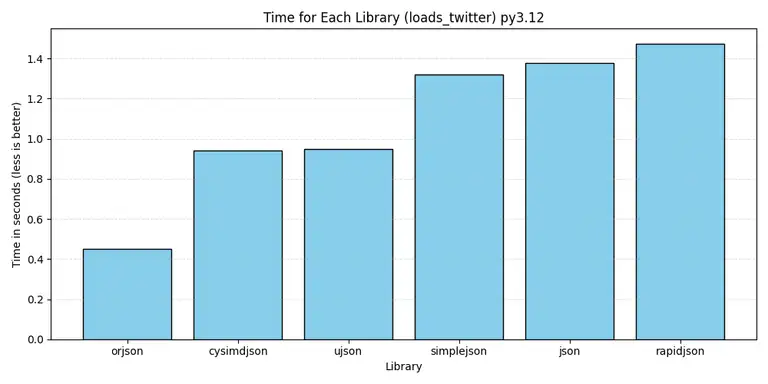

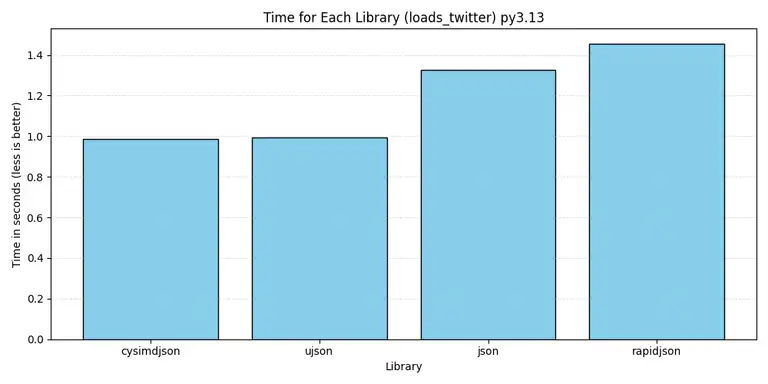

For each library, I will measure the loads and dumps operations on four different JSON files, all proudly copied from orjson's benchmarking code (once again, just copy-pasted from their repo):

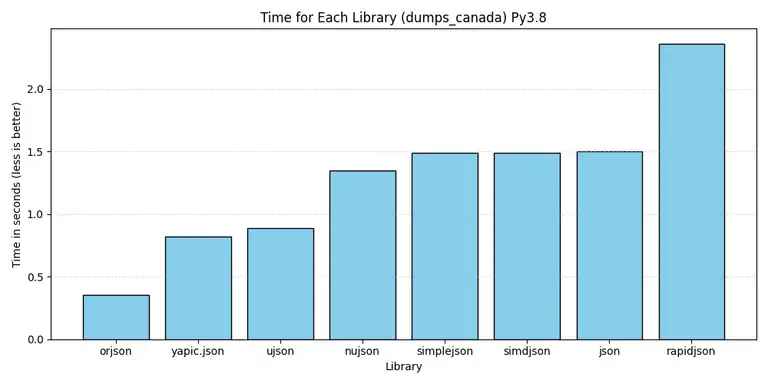

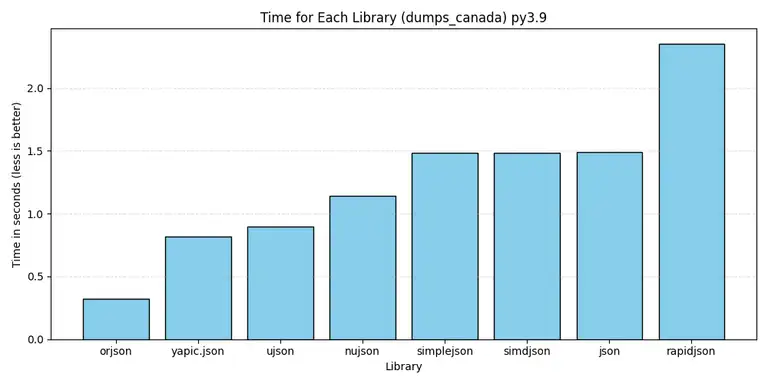

- twitter.json, 631.5KiB: results of a search on Twitter for "一", containing CJK strings, dictionaries of strings, and arrays of dictionaries, indented.

- github.json, 55.8KiB: a GitHub activity feed, containing dictionaries of strings and arrays of dictionaries, not indented.

- citm_catalog.json, 1.7MiB: concert data, containing nested dictionaries of strings and arrays of integers, indented.

- canada.json, 2.2MiB: coordinates of the Canadian border in GeoJSON format, containing floats and arrays, indented.
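The measurement loop itself is not reproduced in the article (the full code lives in the author's repository), so here is a minimal sketch of how a loads/dumps benchmark like this could be structured with the standard `timeit` module. The `LIBRARIES` registry and the `bench`/`run` helpers are hypothetical names, only the standard library and (if installed) orjson are wired in, and the default iteration count is an arbitrary assumption.

```python
import json
import timeit
from typing import Callable, Dict, Tuple

# Hypothetical registry: library name -> (loads, dumps) callables.
LIBRARIES: Dict[str, Tuple[Callable, Callable]] = {
    "json": (json.loads, json.dumps),
}

try:
    import orjson  # optional; skipped if not installed for this interpreter

    LIBRARIES["orjson"] = (orjson.loads, orjson.dumps)
except ImportError:
    pass


def bench(payload: str, loads: Callable, dumps: Callable, number: int = 100) -> Tuple[float, float]:
    """Return mean seconds per call as (loads_time, dumps_time) for one document."""
    obj = loads(payload)  # parse once so dumps is timed on a ready-made object
    t_loads = timeit.timeit(lambda: loads(payload), number=number) / number
    t_dumps = timeit.timeit(lambda: dumps(obj), number=number) / number
    return t_loads, t_dumps


def run(files: Dict[str, str], number: int = 100) -> Dict[Tuple[str, str], Tuple[float, float]]:
    """Benchmark every registered library on every (file name -> JSON text) entry."""
    results = {}
    for fname, payload in files.items():
        for lib, (loads, dumps) in LIBRARIES.items():
            results[(lib, fname)] = bench(payload, loads, dumps, number)
    return results
```

To time the real files, you could build the `files` dict with `pathlib.Path`, e.g. `{p.name: p.read_text(encoding="utf-8") for p in Path("data").glob("*.json")}`, where `data/` is a hypothetical folder holding the four files. Note that `orjson.dumps` returns `bytes` while `json.dumps` returns `str`, so each library is timed on its native output type.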

The benchmarking will be conducted on a Windows 10 system with an Intel i5-8365U processor and 8GB of RAM.

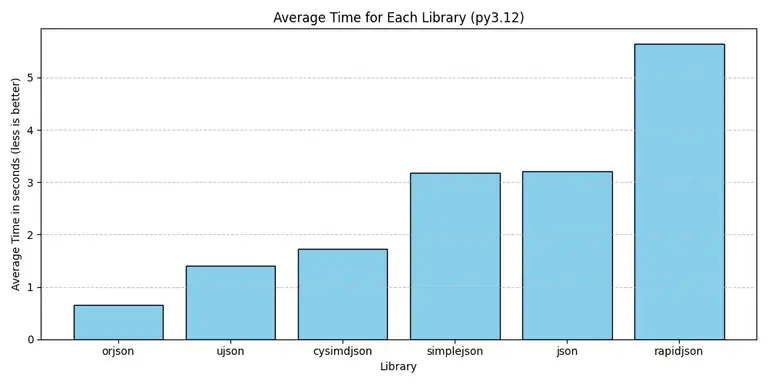

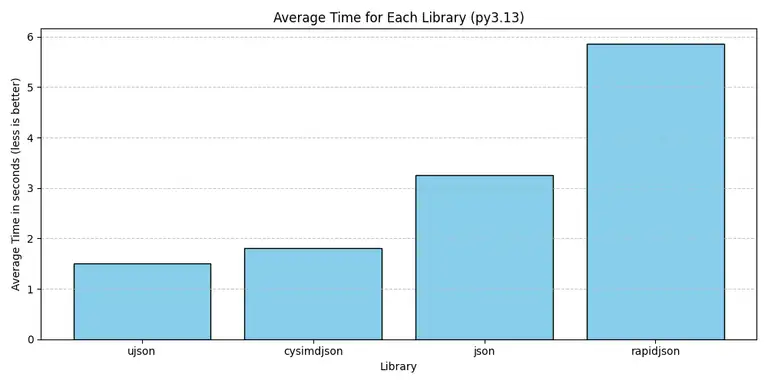

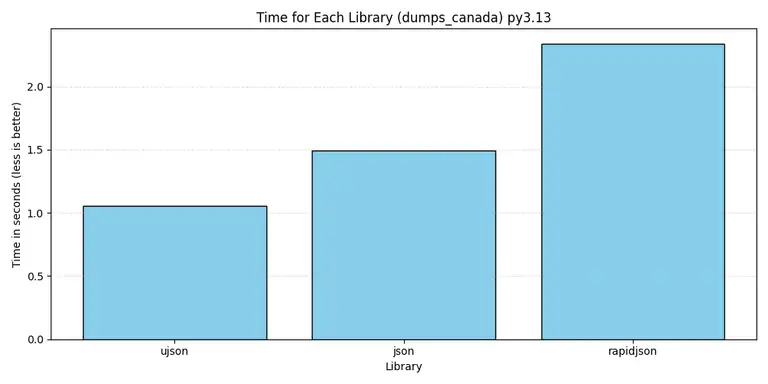

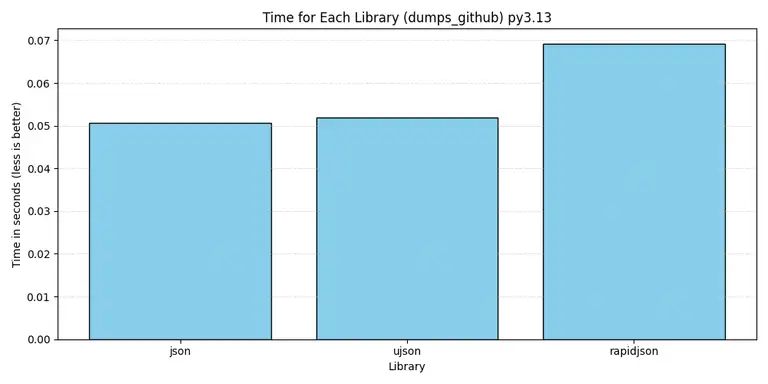

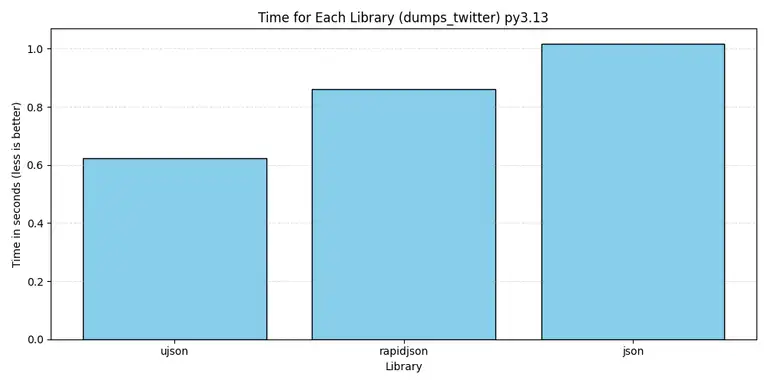

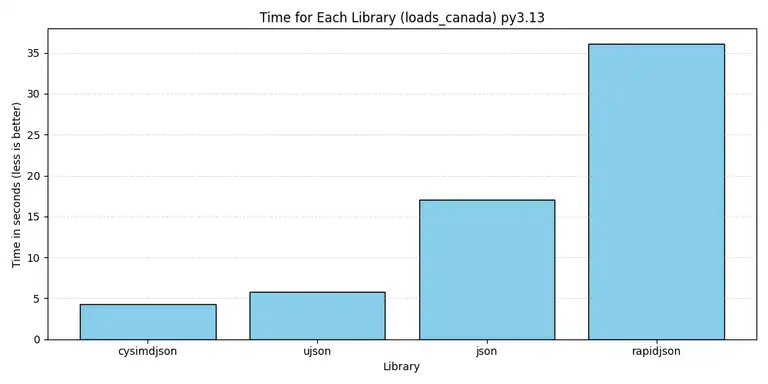

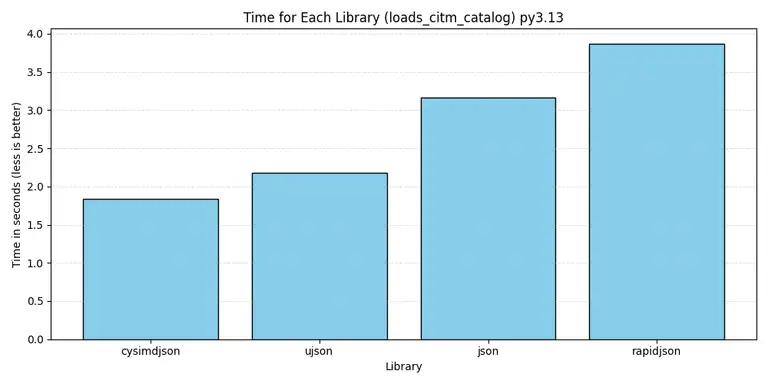

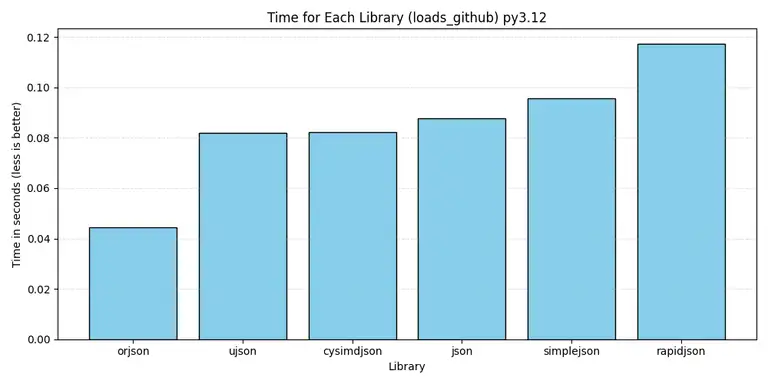

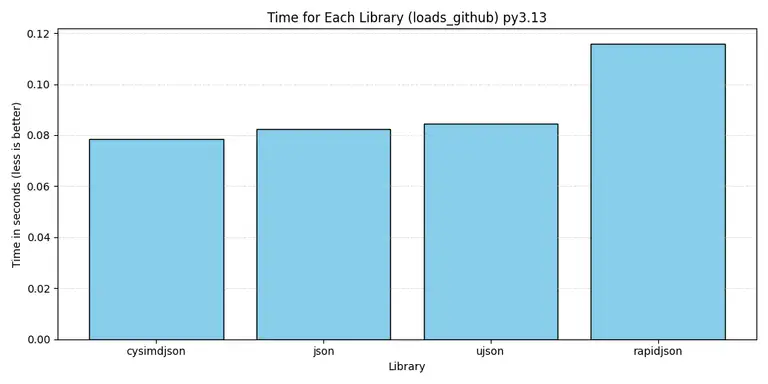

Before we begin, note that fewer libraries are available for each newer Python version, mostly due to compatibility issues. For comparison, the older 3.8 has eight libraries in the benchmark, while the pre-release 3.13 has only four. I initially wanted to drop 3.13 from the benchmarks altogether. However, I kept it so that at least the standard library's json module could be compared, and also, why not?
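A quick way to check which of the eight libraries is usable on a given interpreter is simply to try importing each one. The sketch below assumes the usual import names (for example, pysimdjson imports as `simdjson`, python-rapidjson as `rapidjson`, and yapic.json as `yapic.json`); `CANDIDATES` and `available_libraries` are hypothetical names, not part of the author's benchmark code.

```python
import importlib
import sys
from typing import List

# Assumed mapping: project name -> module name used in `import`.
CANDIDATES = {
    "orjson": "orjson",
    "pysimdjson": "simdjson",
    "yapic.json": "yapic.json",
    "simplejson": "simplejson",
    "ujson": "ujson",
    "python-rapidjson": "rapidjson",
    "cysimdjson": "cysimdjson",
    "nujson": "nujson",
}


def available_libraries() -> List[str]:
    """Return the JSON libraries that actually import on the running interpreter."""
    found = ["json"]  # the standard library module is always present
    for name, module in CANDIDATES.items():
        try:
            importlib.import_module(module)
        except ImportError:
            # Not installed, or no working build for this Python version.
            continue
        found.append(name)
    return found


if __name__ == "__main__":
    version = "%d.%d" % sys.version_info[:2]
    print(version, available_libraries())
```

Actually importing each module, rather than only checking whether the package is installed, should also surface compiled extensions that are present but fail to load on that Python version.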

You can get the full code for this benchmark on my GitHub: https://github.com/catnotfoundnear/json_comp/

Some quick results

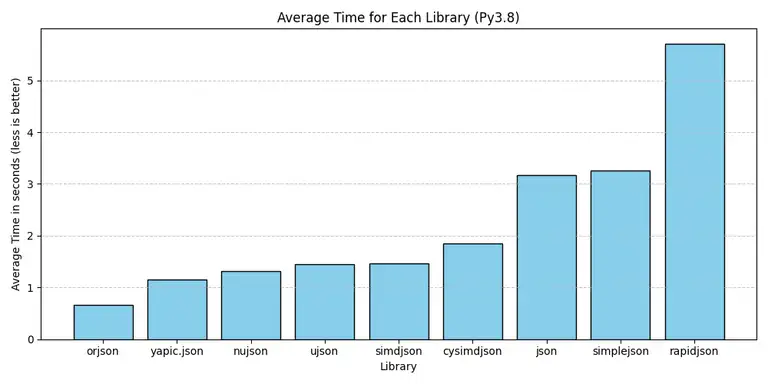

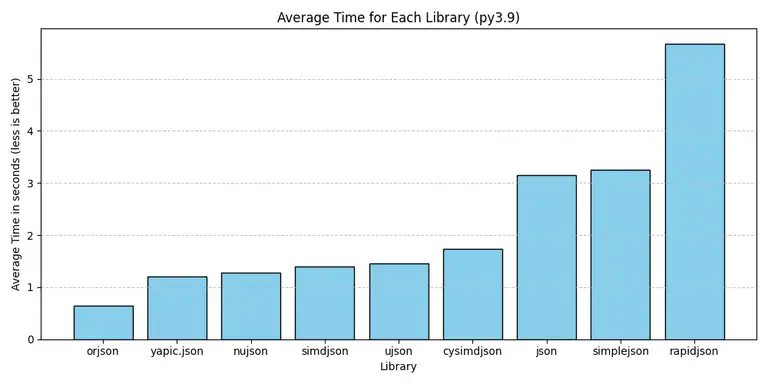

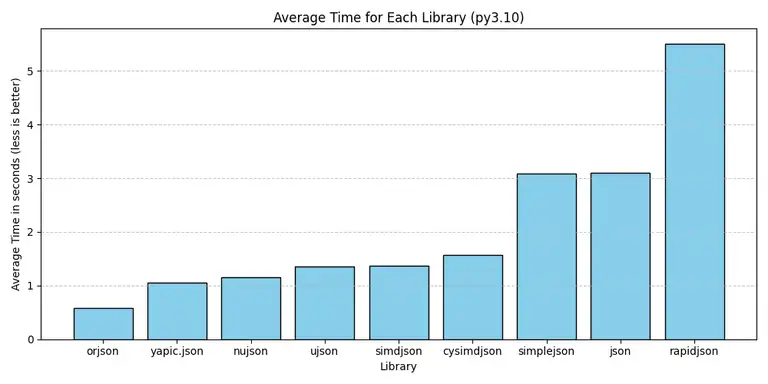

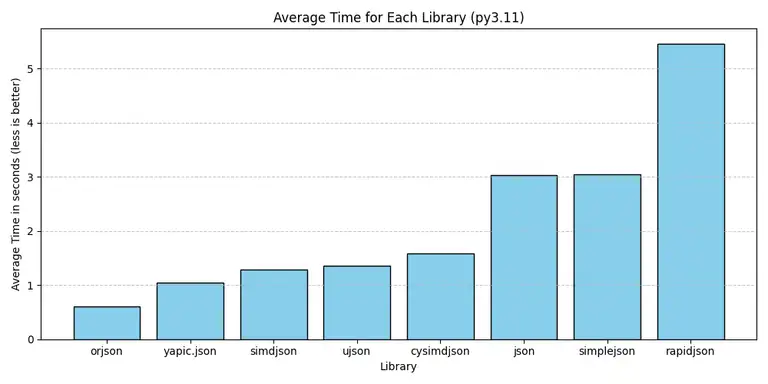

I do not want to trouble you with a long "introduction" or "prologue," or whatever the literature geeks call this thing. Let's move on to the results right away:

The slowest library

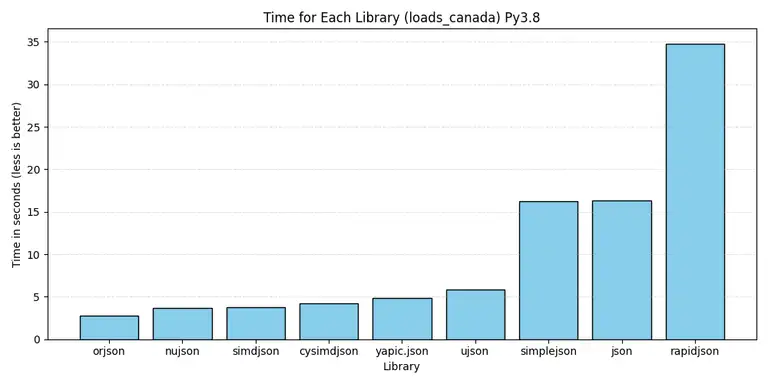

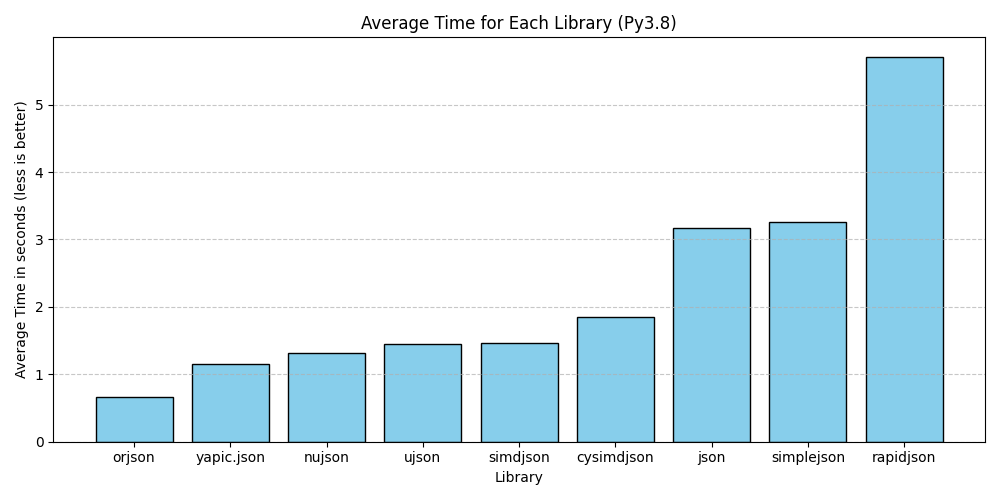

Out of the eight libraries, the slowest one is… Rapidjson.

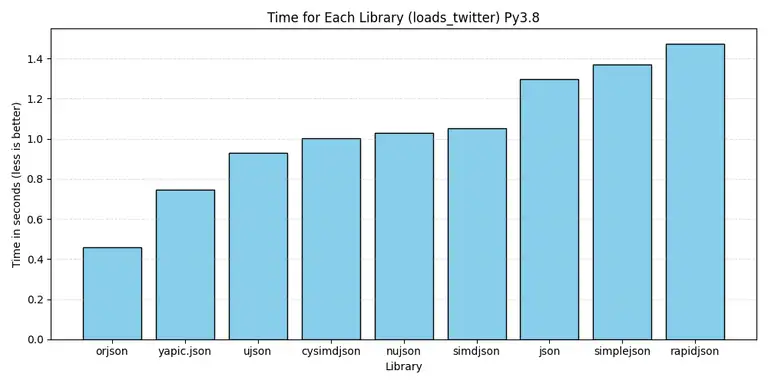

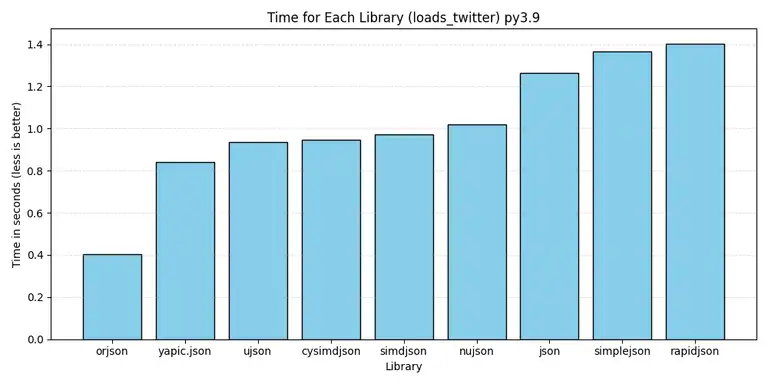

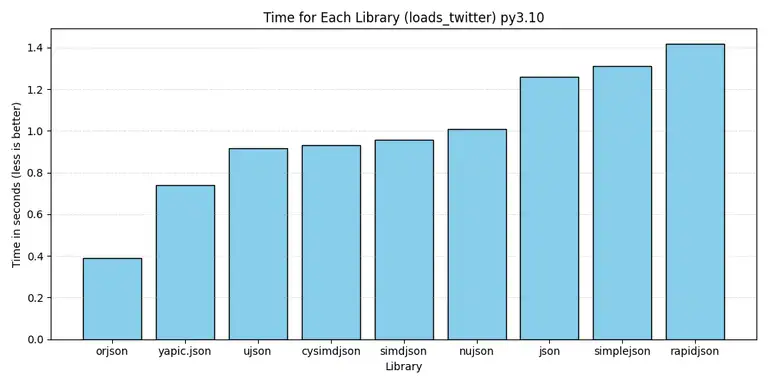

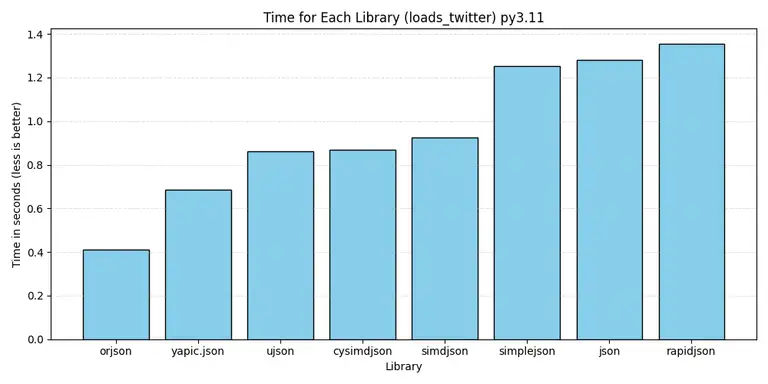

How ironic... And yes, even Python's built-in json module is faster than Rapidjson: on average, Python's json is twice as fast. This is definitely not what I expected.
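The article does not say how the "twice as fast on average" figure was computed. One common choice, sketched here purely as an assumption, is the geometric mean of per-benchmark time ratios; `mean_speedup` is a hypothetical helper that takes two mappings from benchmark name to measured seconds.

```python
import math
from typing import Dict


def mean_speedup(baseline: Dict[str, float], candidate: Dict[str, float]) -> float:
    """Geometric mean of baseline_time / candidate_time over shared benchmarks.

    A result of 2.0 means `candidate` is, on average, twice as fast as `baseline`.
    """
    keys = baseline.keys() & candidate.keys()
    if not keys:
        raise ValueError("no benchmarks in common")
    # Average the log-ratios, then exponentiate back to a ratio.
    logs = [math.log(baseline[k] / candidate[k]) for k in keys]
    return math.exp(sum(logs) / len(logs))
```

A geometric mean keeps one large file (such as canada.json) from dominating the average, and it is symmetric: if A comes out 2.0x faster than B, then B comes out at 0.5x of A.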

The fastest library

Is… orjson!

Orjson turned out to be the fastest library in every single benchmark, on every Python version it currently supports (it does not work on 3.13 yet), beating every other library in both "loads" and "dumps" modes.
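If you switch to orjson, keep in mind that `orjson.dumps` returns `bytes`, whereas `json.dumps` returns `str`. Here is a minimal sketch of a thin wrapper, assuming you want `str` output and a fallback to the standard library on interpreters where orjson is not available (such as 3.13 at the time of writing); the module-level `dumps`/`loads` helpers are hypothetical names.

```python
import json

try:
    import orjson

    def dumps(obj) -> str:
        # orjson.dumps returns UTF-8 encoded bytes; decode to match json.dumps.
        return orjson.dumps(obj).decode("utf-8")

    loads = orjson.loads  # accepts bytes, bytearray, memoryview or str
except ImportError:
    # Fall back to the standard library when orjson cannot be imported.
    def dumps(obj) -> str:
        # Compact separators and raw non-ASCII roughly mimic orjson's output style.
        return json.dumps(obj, ensure_ascii=False, separators=(",", ":"))

    loads = json.loads
```

The two paths are not guaranteed to produce identical output for every input, so check the orjson documentation for its edge cases (serialization options, non-string keys, special float values) before relying on the fallback.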

The slowest Python

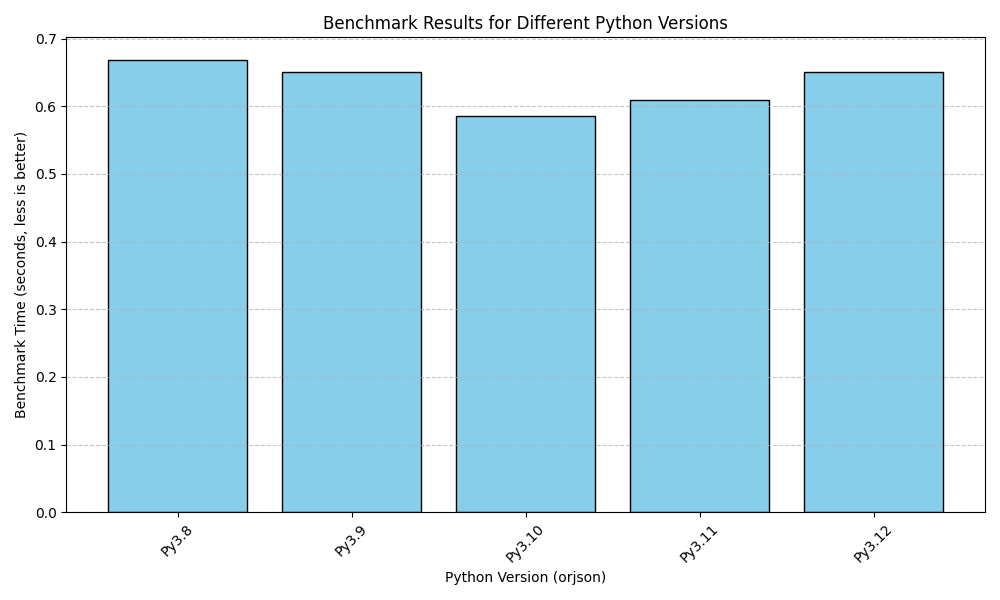

Out of the five tested Python versions, Python 3.8 turned out to be the slowest, about 10% slower than the fastest one, Python 3.10. Python 3.13 was excluded from this comparison, as orjson does not work on it yet.

The full results

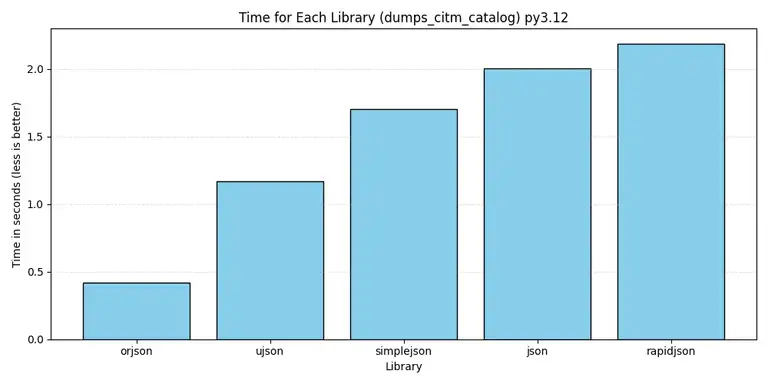

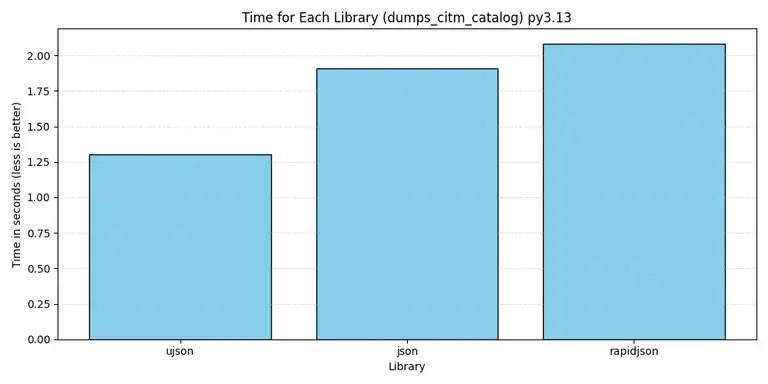

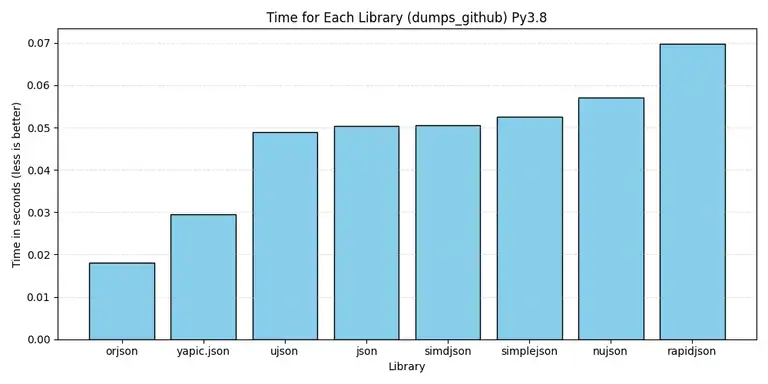

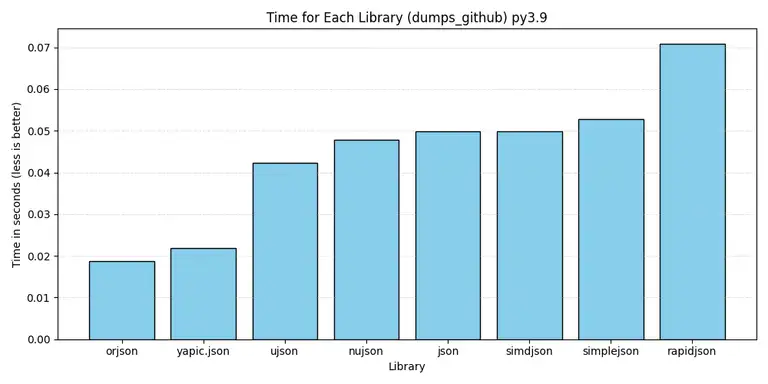

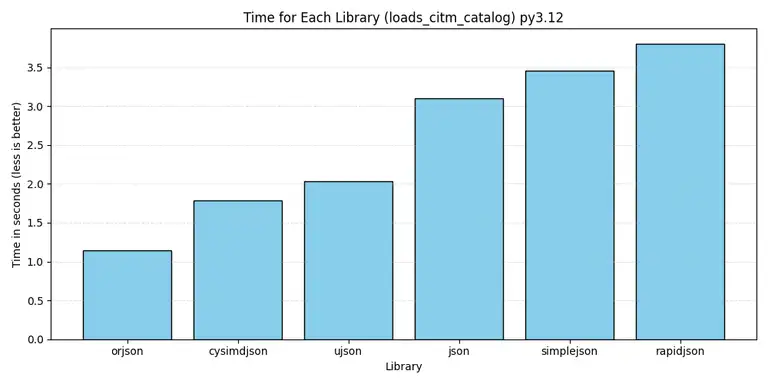

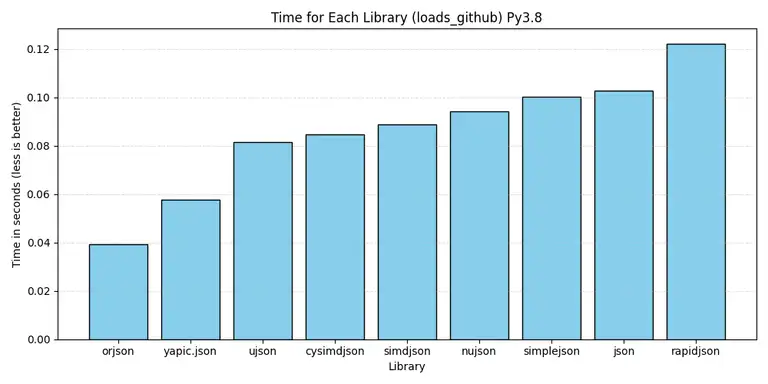

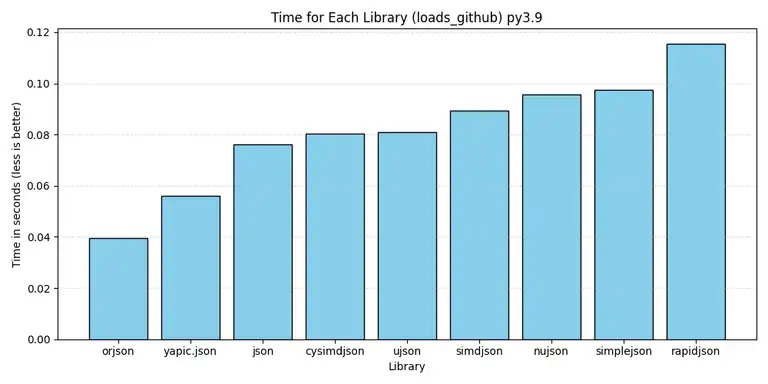

This benchmark has shed light on the performance of different JSON libraries across multiple Python versions. Orjson consistently delivered outstanding results in both parsing ("loads") and serializing ("dumps"). Other libraries, such as yapic.json, nujson, and ujson, also show good results, but they come nowhere near orjson's speed. Now let me show you all 55 charts for your statistical viewing pleasure.

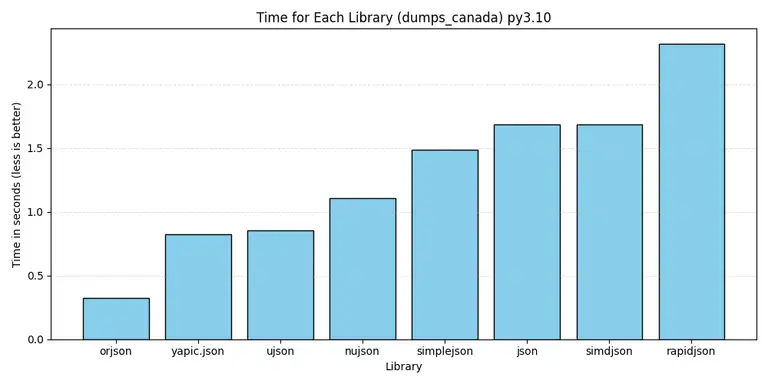

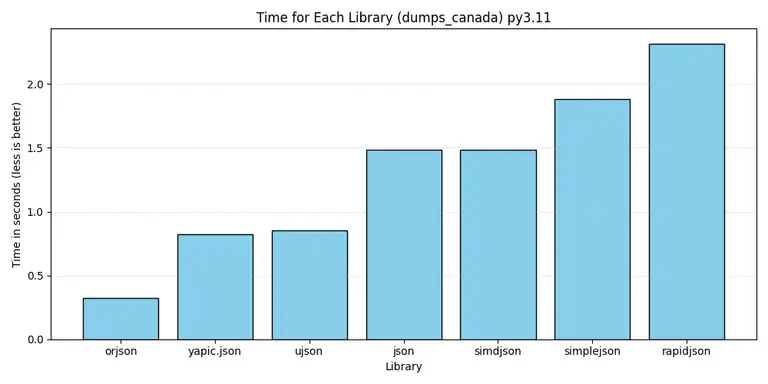

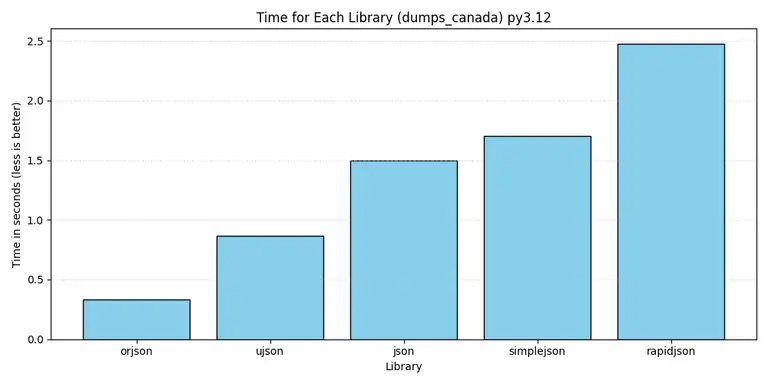

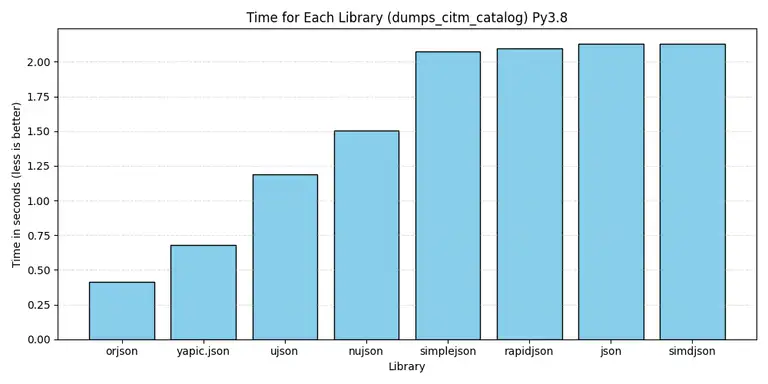

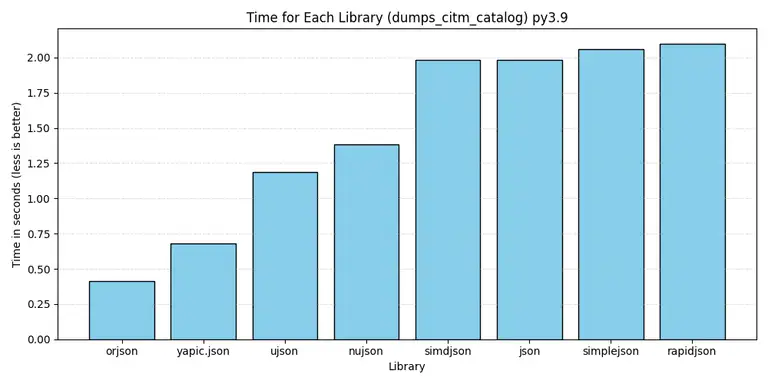

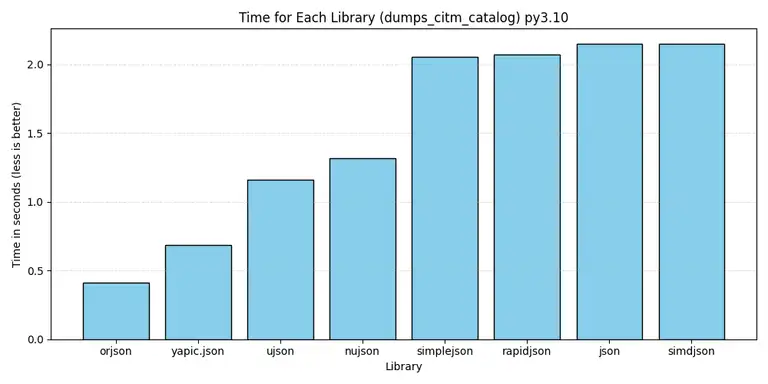

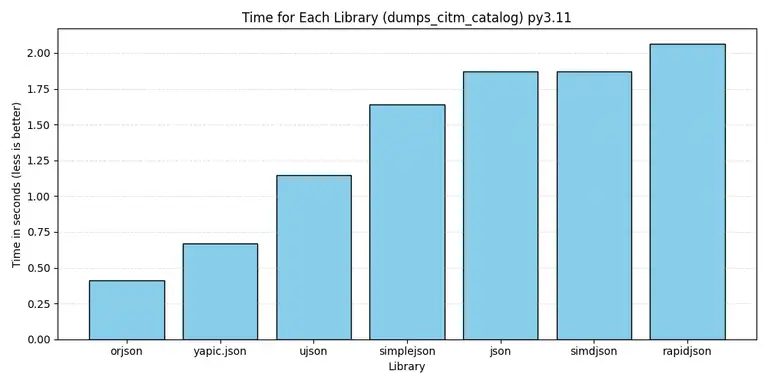

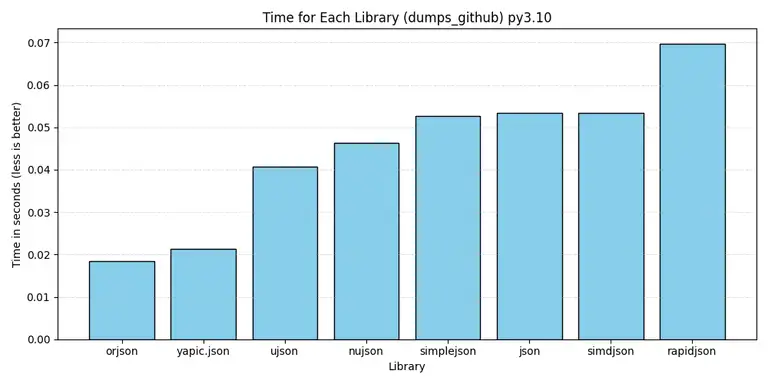

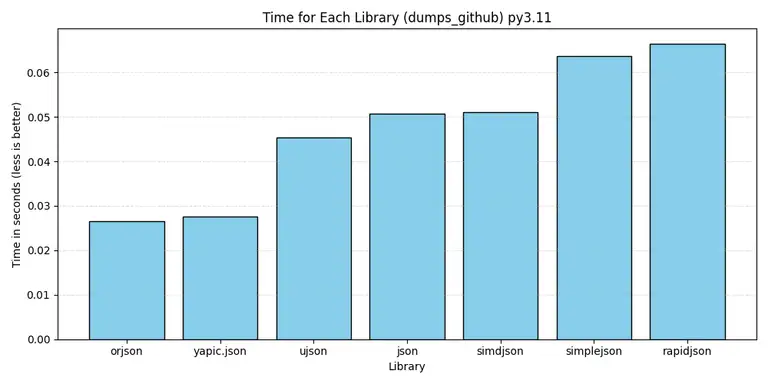

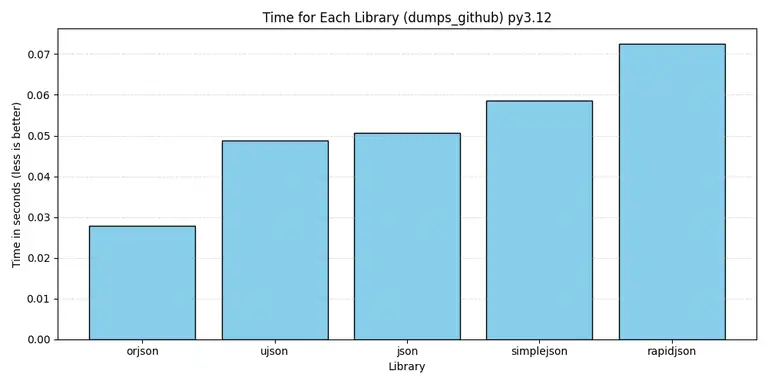

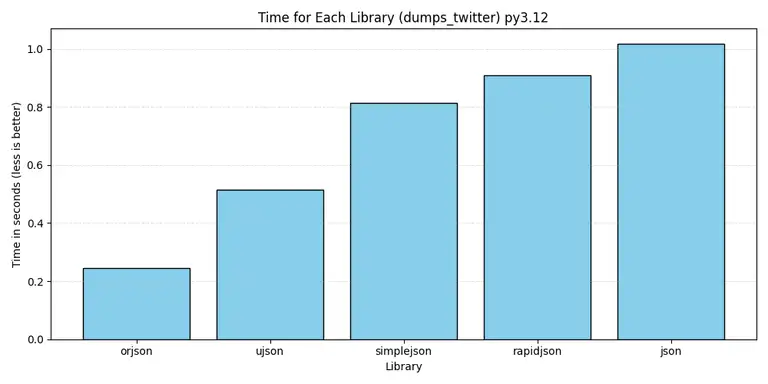

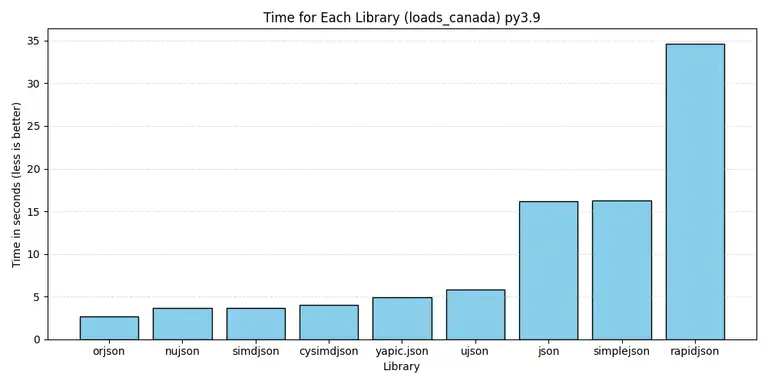

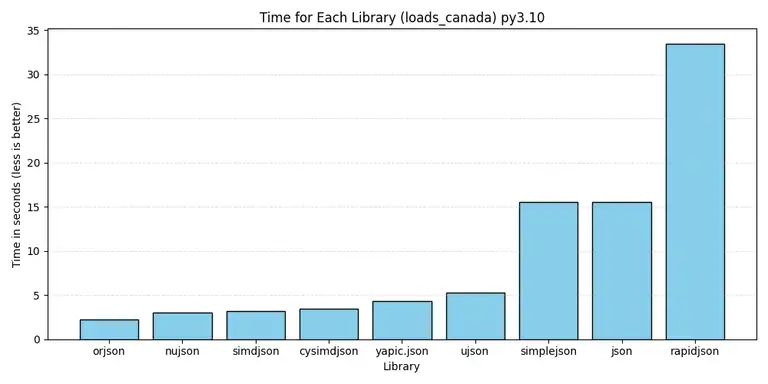

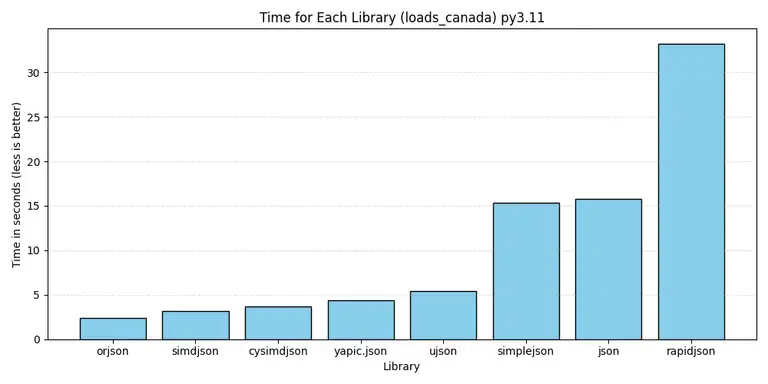

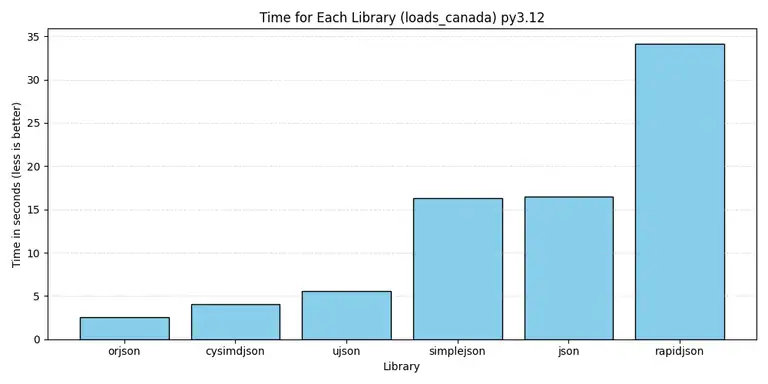

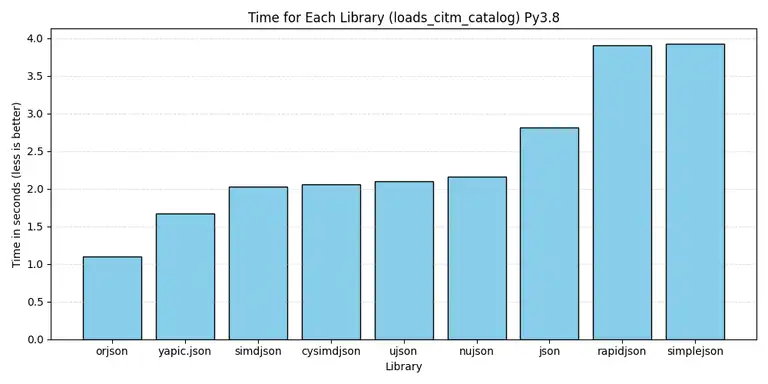

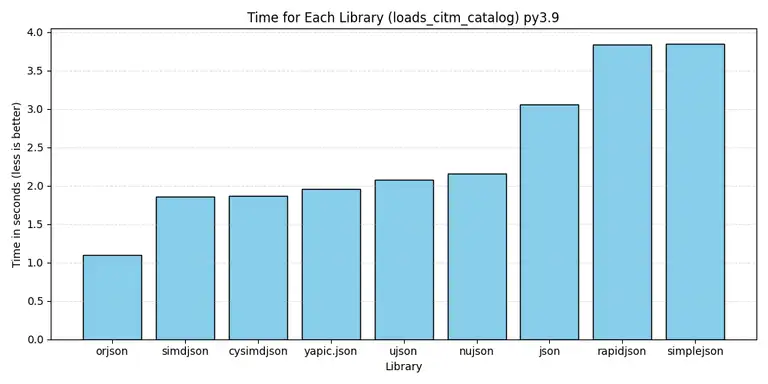

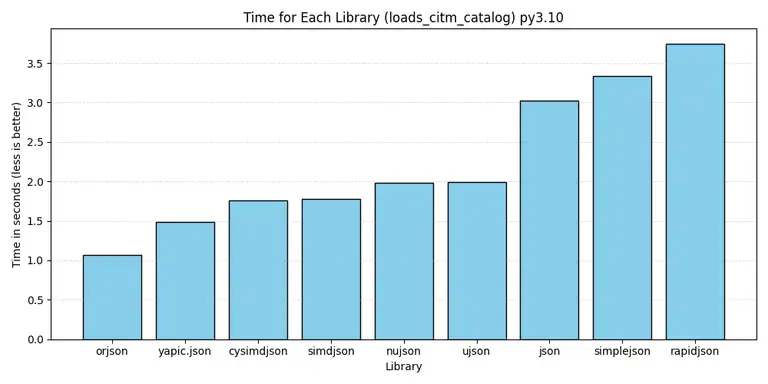

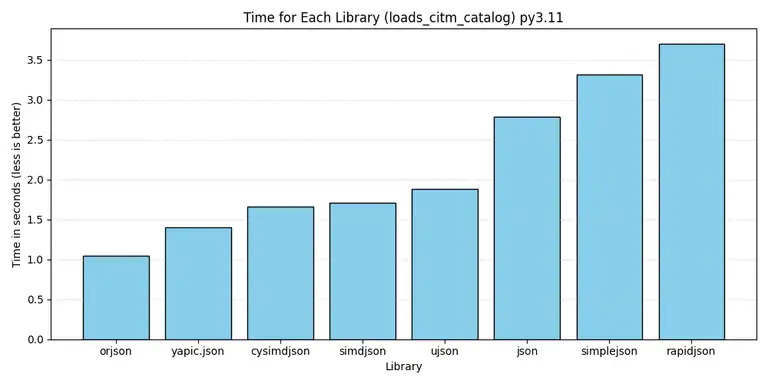

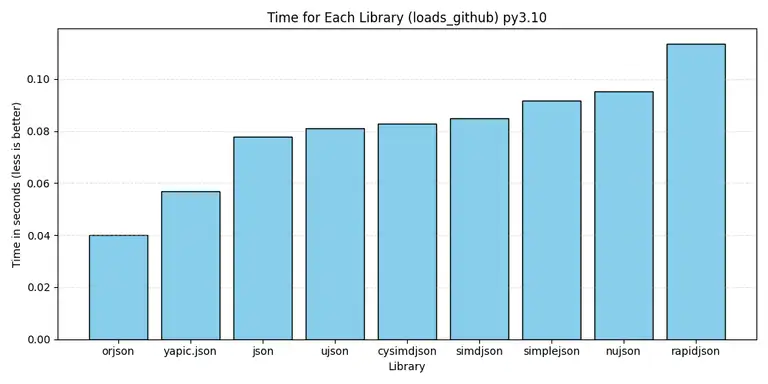

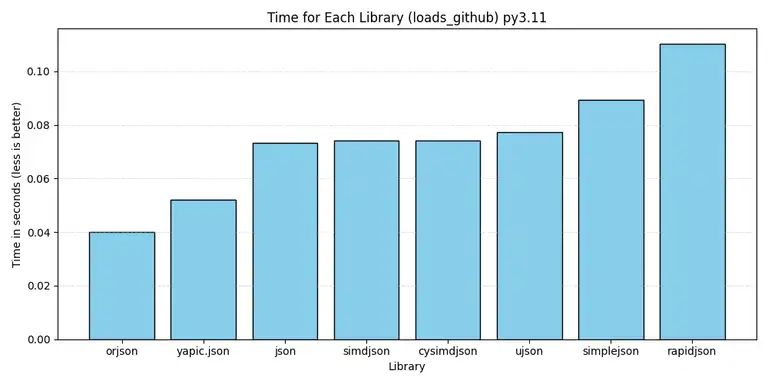

First batch of charts: all JSON libraries for each Python version.

Second batch: individual "dumps" benchmarks across all Python versions and all JSON libraries.

The last batch of charts: likewise, individual "loads" benchmarks across all Python versions and all JSON libraries.

Armed with these results, it's clear that orjson stands tall as the go-to library for all time-critical JSON tasks, beating every other JSON library on every Python version it supports. Beyond offering useful insights for developers, the benchmark also adds a dash of excitement to the world of Python library comparisons. So, the next time you see yet another JSON benchmark, you'll know it needs to include at least eight libraries; otherwise, there's no point in reading it.
